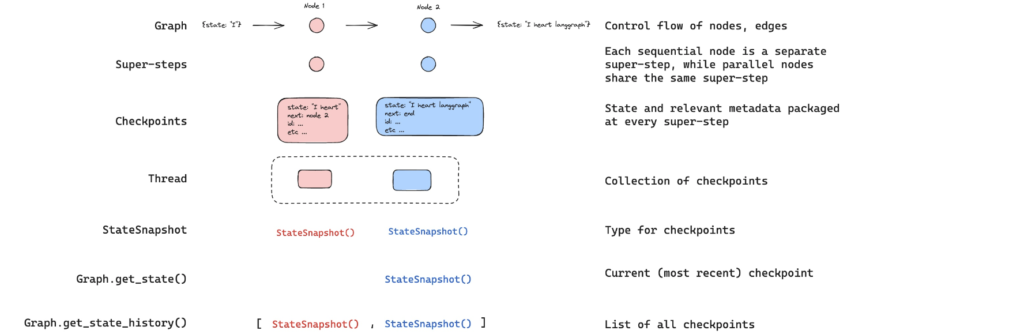

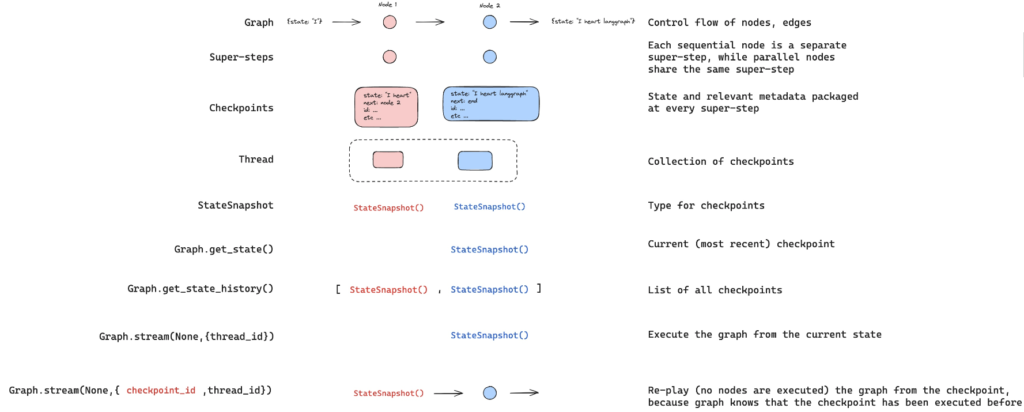

Time travel in LangGraph is a practical tool for debugging workflows. It lets you inspect a run, replay it from a saved checkpoint, and fork it from a modified state, which makes troubleshooting and optimization much easier. In this blog, we’ll walk through how to use time travel in LangGraph: browsing the state history, replaying from a checkpoint, and forking a run.

Why use Time travel in LangGraph?

In LangGraph, the primary purpose of the time travel concept is debugging. It allows you to view, replay, and even fork from past states. By utilizing time travel for states, you can gain deeper insights into each stage of the process.

Browsing the state history

LangGraph lets us retrieve the full history of a graph’s state. Before calling the function that returns this history, let’s build a ReAct-style LangGraph workflow with math tools for addition, multiplication, and division.

from IPython.display import Image, display
from langchain_core.messages import HumanMessage, SystemMessage
from langchain_openai import ChatOpenAI
from langgraph.checkpoint.memory import MemorySaver
from langgraph.graph import START, MessagesState, StateGraph
from langgraph.prebuilt import ToolNode, tools_condition

# Plain Python functions; each one is exposed to the model as a tool
def multiply(a: int, b: int) -> int:
    """Multiply a and b.

    Args:
        a: first int
        b: second int
    """
    return a * b

def add(a: int, b: int) -> int:
    """Adds a and b.

    Args:
        a: first int
        b: second int
    """
    return a + b

def divide(a: int, b: int) -> float:
    """Divide a by b.

    Args:
        a: first int
        b: second int
    """
    return a / b

tools = [add, multiply, divide]
llm = ChatOpenAI(model="gpt-4o")
llm_with_tools = llm.bind_tools(tools)

# System message
sys_msg = SystemMessage(content="You are a helpful assistant tasked with performing arithmetic on a set of inputs.")

# Node
def assistant(state: MessagesState):
    return {"messages": [llm_with_tools.invoke([sys_msg] + state["messages"])]}

# Graph
builder = StateGraph(MessagesState)

# Define nodes: these do the work
builder.add_node("assistant", assistant)
builder.add_node("tools", ToolNode(tools))

# Define edges: these determine the control flow
builder.add_edge(START, "assistant")
builder.add_conditional_edges(
    "assistant",
    # If the latest message (result) from assistant is a tool call -> tools_condition routes to tools
    # If the latest message (result) from assistant is not a tool call -> tools_condition routes to END
    tools_condition,
)
builder.add_edge("tools", "assistant")

# Time travel needs a checkpointer: it saves a snapshot of the state after every step
memory = MemorySaver()
graph = builder.compile(checkpointer=memory)

# Show
display(Image(graph.get_graph(xray=True).draw_mermaid_png()))

Now, let’s run the workflow.

# Input
initial_input = {"messages": [HumanMessage(content="Multiply 2 and 3")]}

# Thread
thread = {"configurable": {"thread_id": "1"}}

# Run the graph to completion, printing the latest message after each step
for event in graph.stream(initial_input, thread, stream_mode="values"):
    event['messages'][-1].pretty_print()

================================ Human Message =================================

Multiply 2 and 3

================================== Ai Message ==================================
Tool Calls:
  multiply (call_ikJxMpb777bKMYgmM3d9mYjW)
 Call ID: call_ikJxMpb777bKMYgmM3d9mYjW
  Args:
    a: 2
    b: 3

================================= Tool Message =================================
Name: multiply

6

================================== Ai Message ==================================

The result of multiplying 2 and 3 is 6.

Now let’s look at the history of the state using the graph’s get_state_history function.

all_states = [s for s in graph.get_state_history(thread)]
print(len(all_states))
# Output: 5

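There are 5 snapshots (checkpoints) in the history. get_state_history returns them most recent first, so all_states[3] is the second-oldest snapshot: the one taken at step 0, after the human message was written to the state and just before the assistant node runs. Let’s look at it.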

print(all_states[3])

# Output

#StateSnapshot(values={'messages': [HumanMessage(content='Multiply 2 and 3', additional_kwargs={}, response_metadata={}, id='19785895-6536-4f3e-8825-e5185ce753b6')]}, next=('assistant',), config={'configurable': {'thread_id': '1', 'checkpoint_ns': '', 'checkpoint_id': '1efe6e1c-9dd7-6989-8000-6507f8b2f9b4'}},

# metadata={'source': 'loop', 'writes': None, 'thread_id': '1', 'step': 0, 'parents': {}}, created_at='2025-02-09T12:31:30.053953+00:00', parent_config={'configurable': {'thread_id': '1', 'checkpoint_ns': '', 'checkpoint_id': '1efe6e1c-9dcf-6fa3-bfff-53e653a72298'}},

# tasks=(PregelTask(id='41a2673f-a798-cc40-c251-5a685e02ec5f',

#name='assistant', path=('__pregel_pull', 'assistant'), error=None, interrupts=(), state=None, result={'messages': [AIMessage(content='', additional_kwargs={'tool_calls': [{'id': 'call_IWZeXpt8c2FKYY11fH0a5G8v', 'function': {'arguments': '{"a":2,"b":3}', 'name': 'multiply'}, 'type': 'function'}], 'refusal': None},

#response_metadata={'token_usage': {'completion_tokens': 18, 'prompt_tokens': 131, 'total_tokens': 149, 'completion_tokens_details': {'accepted_prediction_tokens': 0, 'audio_tokens': 0, 'reasoning_tokens': 0, 'rejected_prediction_tokens': 0}, 'prompt_tokens_details': {'audio_tokens': 0, 'cached_tokens': 0}},

#'model_name': 'gpt-4o-2024-08-06', 'system_fingerprint': 'fp_50cad350e4', 'finish_reason': 'tool_calls', 'logprobs': None}, id='run-570aad9a-eca8-4316-8e43-067363e183f9-0', tool_calls=[{'name': 'multiply', 'args': {'a': 2, 'b': 3}, 'id': 'call_IWZeXpt8c2FKYY11fH0a5G8v', 'type': 'tool_call'}],

#usage_metadata={'input_tokens': 131, 'output_tokens': 18, 'total_tokens': 149, 'input_token_details': {'audio': 0, 'cache_read': 0}, 'output_token_details': {'audio': 0, 'reasoning': 0}})]}),))
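The full snapshot repr is hard to scan, so a compact summary can help when picking a checkpoint. The following is a minimal sketch that relies only on the attributes visible in the output above (`values`, `next`, and `metadata['step']`). `summarize_history` is a hypothetical helper, not part of LangGraph, and `fake_states` are stand-in objects that only mimic the shape of a five-snapshot run so that the block runs without an API key; apart from the step-0 entry, their contents are illustrative.

```python
from types import SimpleNamespace


def summarize_history(states):
    """Return one (index, step, next, message_count) tuple per snapshot.

    `states` is any sequence of objects shaped like the snapshots above,
    for example list(graph.get_state_history(thread)). Hypothetical helper.
    """
    rows = []
    for index, snapshot in enumerate(states):
        step = snapshot.metadata.get("step")
        messages = snapshot.values.get("messages", [])
        rows.append((index, step, snapshot.next, len(messages)))
    return rows


# Stand-ins ordered newest first, mimicking the shape of all_states.
fake_states = [
    SimpleNamespace(values={"messages": ["human", "ai", "tool", "ai"]}, next=(), metadata={"step": 3}),
    SimpleNamespace(values={"messages": ["human", "ai", "tool"]}, next=("assistant",), metadata={"step": 2}),
    SimpleNamespace(values={"messages": ["human", "ai"]}, next=("tools",), metadata={"step": 1}),
    SimpleNamespace(values={"messages": ["human"]}, next=("assistant",), metadata={"step": 0}),
    SimpleNamespace(values={"messages": []}, next=("__start__",), metadata={"step": -1}),
]

for row in summarize_history(fake_states):
    print(row)
```

With a real run, you would pass `all_states` instead of `fake_states` and read off the index of the snapshot you want to inspect.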

Replaying the state

Replay is another useful debugging technique. It lets you re-run the graph starting from any previously saved checkpoint.

Suppose you want to re-run the workflow from the step-0 snapshot, the one that holds only the human message "Multiply 2 and 3". With five snapshots in the history, all_states[-2] is the same snapshot as all_states[3].

to_replay = all_states[-2]

print(to_replay.values)

#Output

#{'messages': [HumanMessage(content='Multiply 2 and 3', id='4ee8c440-0e4a-47d7-852f-06e2a6c4f84d')]}

print(to_replay.config)

#{'configurable': {'thread_id': '1',

# 'checkpoint_ns': '',

# 'checkpoint_id': '1ef6a440-a003-6c74-8000-8a2d82b0d126'}}
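Hard-coding an index such as `-2` works for this short run but breaks as soon as the history grows. One alternative, sketched here under the assumption that each snapshot exposes the `next` tuple shown earlier, is to select the snapshot by the node it is waiting on. `earliest_snapshot_before` is a hypothetical helper, and `stand_ins` only imitate snapshots so the block is runnable on its own.

```python
from types import SimpleNamespace


def earliest_snapshot_before(states, node_name):
    """Return the oldest snapshot whose `next` tuple contains node_name.

    `states` is assumed to be ordered newest first, like all_states,
    so we walk it in reverse to reach the oldest match.
    """
    for snapshot in reversed(list(states)):
        if node_name in snapshot.next:
            return snapshot
    raise LookupError(f"no snapshot is waiting on node {node_name!r}")


# Stand-ins ordered newest first; `label` exists only for this demo.
stand_ins = [
    SimpleNamespace(next=(), label="final answer"),
    SimpleNamespace(next=("assistant",), label="after tool call"),
    SimpleNamespace(next=("tools",), label="after first assistant turn"),
    SimpleNamespace(next=("assistant",), label="human message only"),
    SimpleNamespace(next=("__start__",), label="empty input"),
]

to_replay_stand_in = earliest_snapshot_before(stand_ins, "assistant")
print(to_replay_stand_in.label)
```

Note that the assistant node is pending twice in a ReAct loop (before the first turn and again after the tool call), which is why the helper searches from the oldest snapshot.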

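To replay from here, pass this config back to the graph with None as the input. The graph recognizes the checkpoint, so the steps recorded up to it are not executed again. Execution resumes from the checkpoint, and the nodes that come after it (here, the assistant and then the tools) run again. That is why the tool-call ID in the output can differ from the one in the original run.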

for event in graph.stream(None, to_replay.config, stream_mode="values"):
    event['messages'][-1].pretty_print()

# Output

================================ Human Message =================================

Multiply 2 and 3

================================== Ai Message ==================================
Tool Calls:
  multiply (call_SABfB57CnDkMu9HJeUE0mvJ9)
 Call ID: call_SABfB57CnDkMu9HJeUE0mvJ9
  Args:
    a: 2
    b: 3

================================= Tool Message =================================
Name: multiply

6

================================== Ai Message ==================================

The result of multiplying 2 and 3 is 6.

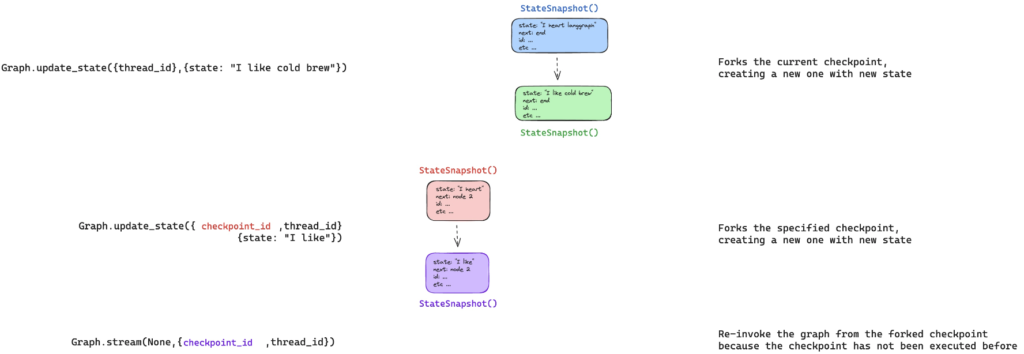
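As a rough mental model, LangGraph's persistence documentation describes replay as skipping the steps before the chosen checkpoint and executing the steps after it. The toy below imitates that behavior for a fixed, linear list of nodes. It is a simplified sketch, not LangGraph's implementation: `ToyCheckpointer`, `run`, and the two node functions are hypothetical names, and checkpoint ids are plain list indices.

```python
from dataclasses import dataclass, field


@dataclass
class ToyCheckpointer:
    """Toy stand-in for a checkpointer: stores (state, next_index) pairs."""
    checkpoints: list = field(default_factory=list)

    def save(self, state, next_index):
        self.checkpoints.append((list(state), next_index))
        return len(self.checkpoints) - 1  # toy checkpoint id


def run(nodes, checkpointer, state=None, resume_from=None):
    """Run `nodes` in order, saving a checkpoint after each one.

    With resume_from set, load that checkpoint and execute only the
    nodes that come after it; earlier nodes are not executed again.
    """
    if resume_from is None:
        state, next_index = list(state), 0
        checkpointer.save(state, next_index)
    else:
        saved_state, next_index = checkpointer.checkpoints[resume_from]
        state = list(saved_state)
    for index in range(next_index, len(nodes)):
        state = state + [nodes[index](state)]
        checkpointer.save(state, index + 1)
    return state


calls = {"assistant": 0, "tool": 0}


def assistant(state):
    calls["assistant"] += 1
    return f"assistant saw {len(state)} message(s)"


def tool(state):
    calls["tool"] += 1
    return "tool result"


nodes = [assistant, tool]
saver = ToyCheckpointer()
final = run(nodes, saver, state=["Multiply 2 and 3"])

# Checkpoint 1 was saved after the assistant ran, so only `tool` runs again.
replayed = run(nodes, saver, resume_from=1)
print(calls)
```

Resuming from checkpoint 1 leaves the assistant's call count untouched and increments only the tool's, which mirrors the "replay before, execute after" rule described above.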

Forking

In replay, the system executes the graph from the provided state’s snapshot. However, if you need to modify the snapshot and re-run the graph from that updated state, you can use forking to achieve this. Let’s see it by changing our multiplication message.

to_fork = all_states[-2]
to_fork.values["messages"]
# [HumanMessage(content='Multiply 2 and 3', id='4ee8c440-0e4a-47d7-852f-06e2a6c4f84d')]

Now, let’s modify the message using update_state.

fork_config = graph.update_state(
    to_fork.config,
    {"messages": [HumanMessage(content='Multiply 5 and 3',
                               id=to_fork.values["messages"][0].id)]},
)

In the above, we have passed two things, the config of the state snapshot that we want to update, and the message.

The messages key uses a reducer, so by default an update appends a new message to the list instead of overwriting it. To replace the original message rather than append a second one, we give the new HumanMessage the same id as the message it replaces.
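The append-versus-replace behavior can be illustrated with a small toy reducer. This is a simplified sketch of the idea, not the reducer LangGraph ships: `Msg` and `merge_messages` are hypothetical names, and details of the real reducer (such as how it handles messages without an id) are left out.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Msg:
    """Hypothetical stand-in for a chat message that carries an id."""
    id: str
    content: str


def merge_messages(existing, updates):
    """Toy reducer: replace a message whose id matches, otherwise append."""
    merged = list(existing)
    position = {message.id: i for i, message in enumerate(merged)}
    for update in updates:
        if update.id in position:
            merged[position[update.id]] = update  # same id: overwrite in place
        else:
            position[update.id] = len(merged)
            merged.append(update)  # new id: append to the end
    return merged


history = [Msg(id="m1", content="Multiply 2 and 3")]

# A new id appends a second message; reusing "m1" replaces the first one.
appended = merge_messages(history, [Msg(id="m2", content="Multiply 5 and 3")])
replaced = merge_messages(history, [Msg(id="m1", content="Multiply 5 and 3")])

print([m.content for m in appended])
print([m.content for m in replaced])
```

In the fork, reusing the id corresponds to the second case: the state still holds a single human message, now with the new content.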

update_state returns the config of a new, forked checkpoint. When we stream from fork_config, the graph runs the assistant on the modified message instead of reusing the earlier result.

for event in graph.stream(None, fork_config, stream_mode="values"):
    event['messages'][-1].pretty_print()

================================ Human Message =================================

Multiply 5 and 3

================================== Ai Message ==================================
Tool Calls:
  multiply (call_KP2CVNMMUKMJAQuFmamHB21r)
 Call ID: call_KP2CVNMMUKMJAQuFmamHB21r
  Args:
    a: 5
    b: 3

================================= Tool Message =================================
Name: multiply

15

================================== Ai Message ==================================

The result of multiplying 5 and 3 is 15.