In AI workflows, automating tasks with LLMs (Large Language Models) is powerful, but some decisions require human oversight. Human-in-the-loop (HITL) ensures human intervention at critical decision points, improving accuracy, handling sensitive cases, and providing better control. This blog explores how to implement human-in-the-loop in LangGraph to enhance decision-making in AI-powered workflows.

Why is Human-in-the-loop required?

With LangGraph, we can build almost any workflow by using custom functions as nodes. These functions can call APIs, tools, or any other logic. When a step is critical or hard to undo, the workflow should pause for human approval before it continues, and this is where human-in-the-loop helps.

Human-in-the-loop helps with:

(1) Approval – We can interrupt our agent, surface state to a user, and allow the user to accept an action

(2) Debugging – We can rewind the graph to reproduce or avoid issues

(3) Editing – We can modify the state before the graph continues

How to implement human-in-the-loop

There are two methods to implement human-in-the-loop.

- Using Breakpoints

- Using the interrupt function

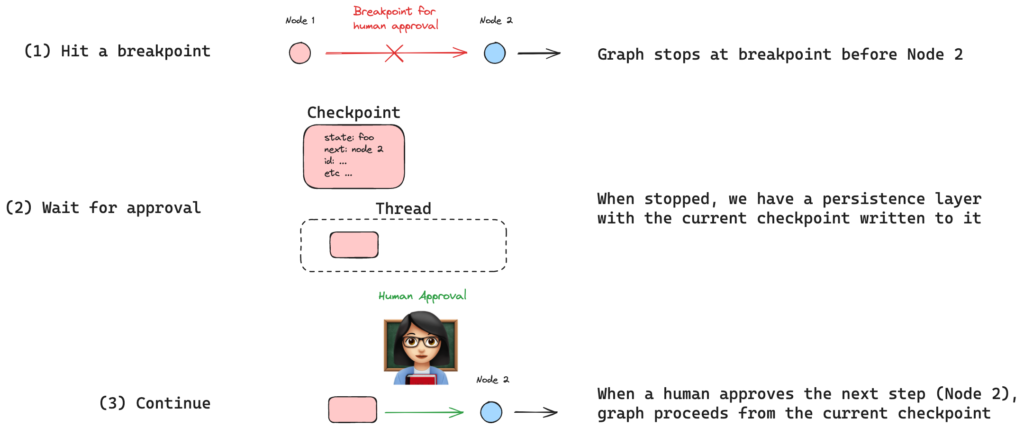

1. Using Breakpoints

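As the name suggests, a breakpoint runs the graph up to a chosen node and then pauses until a human lets it continue. Breakpoints need a checkpointer so that the paused state can be saved and resumed.

Let’s assume that we are concerned about tool use: we want to approve each tool call before the agent makes it.

For that, all we need to do is compile the graph with interrupt_before=["tools"], where tools is the name of our tool node. Execution is then interrupted just before the tools node, which executes the tool call.

The example below places the breakpoint on the assistant node instead, with interrupt_before=["assistant"], so that we can review and edit the state before the model is called.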
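The pause-before-a-node behaviour can be pictured with a toy runner in plain Python. This is only a mental model, not how LangGraph is implemented: `ToyRunner`, its `position` field, and the `resume` flag are hypothetical names invented for the illustration, and the two nodes simply append to a log.

```python
from typing import Callable, Dict, List, Optional

State = Dict[str, list]
Node = Callable[[State], State]


class ToyRunner:
    """Runs nodes in order and pauses before any node listed in interrupt_before."""

    def __init__(self, nodes: Dict[str, Node], order: List[str], interrupt_before: List[str]):
        self.nodes = nodes
        self.order = order
        self.interrupt_before = set(interrupt_before)
        self.position = 0  # index of the next node to run (our stand-in for a checkpoint)
        self.state: State = {"log": []}

    @property
    def next(self) -> Optional[str]:
        """Name of the node that would run next, or None when finished."""
        return self.order[self.position] if self.position < len(self.order) else None

    def run(self, resume: bool = False) -> State:
        """Run until the next breakpoint; pass resume=True to step past one."""
        while self.next is not None:
            if self.next in self.interrupt_before and not resume:
                break  # pause and hand control back to the human
            resume = False  # an approval only covers a single breakpoint
            self.state = self.nodes[self.next](self.state)
            self.position += 1
        return self.state


runner = ToyRunner(
    nodes={
        "assistant": lambda s: {"log": s["log"] + ["assistant ran"]},
        "tools": lambda s: {"log": s["log"] + ["tools ran"]},
    },
    order=["assistant", "tools"],
    interrupt_before=["tools"],
)
runner.run()                      # stops before "tools"
paused_at = runner.next           # the node waiting for approval
final = runner.run(resume=True)   # the human approved, so continue
```

The saved position plays the role of the checkpointer: without it, the runner would have no way to continue from where it stopped.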

from IPython.display import Image, display
from langgraph.checkpoint.memory import MemorySaver
from langgraph.graph import MessagesState
from langgraph.graph import START, StateGraph
from langgraph.prebuilt import tools_condition, ToolNode
from langchain_core.messages import AIMessage, HumanMessage, SystemMessage
from langchain_openai import ChatOpenAI

def multiply(a: int, b: int) -> int:
    """Multiply a and b.

    Args:
        a: first int
        b: second int
    """
    return a * b

# This will be a tool
def add(a: int, b: int) -> int:
    """Adds a and b.

    Args:
        a: first int
        b: second int
    """
    return a + b

def divide(a: int, b: int) -> float:
    """Divide a by b.

    Args:
        a: first int
        b: second int
    """
    return a / b

tools = [add, multiply, divide]
llm = ChatOpenAI(model="gpt-4o")
llm_with_tools = llm.bind_tools(tools)

# System message
sys_msg = SystemMessage(content="You are a helpful assistant tasked with performing arithmetic on a set of inputs.")

# Node
def assistant(state: MessagesState):
    return {"messages": [llm_with_tools.invoke([sys_msg] + state["messages"])]}

# Graph
builder = StateGraph(MessagesState)

# Define nodes: these do the work
builder.add_node("assistant", assistant)
builder.add_node("tools", ToolNode(tools))

# Define edges: these determine the control flow
builder.add_edge(START, "assistant")
builder.add_conditional_edges(
    "assistant",
    # If the latest message (result) from assistant is a tool call -> tools_condition routes to tools
    # If the latest message (result) from assistant is not a tool call -> tools_condition routes to END
    tools_condition,
)
builder.add_edge("tools", "assistant")

# Pause before the assistant node; the checkpointer stores the paused state
memory = MemorySaver()
graph = builder.compile(interrupt_before=["assistant"], checkpointer=memory)

# Show
display(Image(graph.get_graph(xray=True).draw_mermaid_png()))

Let’s see this in action.

# Input
initial_input = {"messages": "Multiply 2 and 3"}

# Thread
thread = {"configurable": {"thread_id": "1"}}

# Run the graph until the first interruption
for event in graph.stream(initial_input, thread, stream_mode="values"):
    event['messages'][-1].pretty_print()

================================ Human Message =================================

Multiply 2 and 3

Let’s check the current state of the graph to get a clear understanding.

state = graph.get_state(thread)

print(state)

#StateSnapshot(values={'messages': [HumanMessage(content='Multiply 2 and 3', id='e7edcaba-bfed-4113-a85b-25cc39d6b5a7')]}, next=('assistant',), config={'configurable': {'thread_id': '1', 'checkpoint_ns': '', 'checkpoint_id': '1ef6a412-5b2d-601a-8000-4af760ea1d0d'}},

#metadata={'source': 'loop', 'writes': None, 'step': 0, 'parents': {}}, created_at='2024-09-03T22:09:10.966883+00:00', parent_config={'configurable': {'thread_id': '1', 'checkpoint_ns': '', 'checkpoint_id': '1ef6a412-5b28-6ace-bfff-55d7a2c719ae'}},

#tasks=(PregelTask(id='dbee122a-db69-51a7-b05b-a21fab160696', name='assistant', error=None, interrupts=(), state=None),))

# Get the name of the node that will run next
print(state.next)

# ('assistant',)

As you can see, the workflow hasn’t executed the assistant node because we interrupted the workflow just before the assistant node.

Now, there are two things you can do:

i. Execute the graph without changing the state’s values

If you are fine with the state’s values, resume the workflow from where it stopped by passing None as the input to graph.stream.

for event in graph.stream(None, thread, stream_mode="values"):
    event['messages'][-1].pretty_print()

================================== Ai Message ==================================

Tool Calls:

multiply (call_eqlctYi3bluPXUgdW0Ac6Abr)

Call ID: call_eqlctYi3bluPXUgdW0Ac6Abr

Args:

a: 2

b: 3

================================= Tool Message =================================

Name: multiply

6

================================== Ai Message ==================================
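In practice, the decision to resume usually sits behind an explicit approval step. The helper below is a minimal sketch of that idea: `resume_if_approved` is a hypothetical name, and it only relies on the `get_state` and `stream` calls already used in this post, so it assumes `graph` is a compiled graph with a checkpointer.

```python
def resume_if_approved(graph, thread, approved: bool):
    """Resume a paused graph only if a human approved; return the streamed events.

    `graph` is assumed to be a compiled LangGraph graph (or any object that
    exposes the same get_state / stream methods used earlier in this post).
    """
    pending = graph.get_state(thread).next  # tuple of node names waiting to run
    if not pending:
        return []  # nothing is paused, so there is nothing to resume
    if not approved:
        print(f"Rejected: {pending} will not run.")
        return []
    # Passing None as the input continues from the saved checkpoint.
    return list(graph.stream(None, thread, stream_mode="values"))
```

A notebook could call it as `resume_if_approved(graph, thread, approved=input("Continue? (yes/no): ").strip().lower() == "yes")`. Rejecting simply leaves the thread paused at its checkpoint, so it can still be resumed or edited later.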

ii. Execute the graph by changing the state’s values

You can also add to or modify the state before resuming the workflow by calling update_state.

graph.update_state(
    thread,
    {"messages": [HumanMessage(content="No, I want to multiply 4 and 3!")]},
)

for event in graph.stream(None, thread, stream_mode="values"):
    event['messages'][-1].pretty_print()

================================ Human Message =================================

No, I want to multiply 4 and 3!

================================== Ai Message ==================================

Tool Calls:

multiply (call_Mbu8MfA0krQh8rkZZALYiQMk)

Call ID: call_Mbu8MfA0krQh8rkZZALYiQMk

Args:

a: 4

b: 3

================================= Tool Message =================================

Name: multiply

12
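Note that the update was appended to the conversation instead of replacing it. That is because the messages key in MessagesState is merged by a reducer rather than overwritten. The sketch below is a simplified stand-in for that behaviour, assuming append-or-replace-by-id semantics: `merge_messages` is a hypothetical function written for illustration, and messages are plain dicts rather than LangChain message objects.

```python
from typing import Dict, List

Message = Dict[str, str]


def merge_messages(existing: List[Message], updates: List[Message]) -> List[Message]:
    """Append updates, replacing any existing message that shares an id."""
    merged = list(existing)  # copy so the original history is left untouched
    index_by_id = {m["id"]: i for i, m in enumerate(merged) if "id" in m}
    for msg in updates:
        msg_id = msg.get("id")
        if msg_id is not None and msg_id in index_by_id:
            merged[index_by_id[msg_id]] = msg  # same id: overwrite in place
        else:
            if msg_id is not None:
                index_by_id[msg_id] = len(merged)
            merged.append(msg)  # new id (or no id): append to the history
    return merged


history = [{"id": "1", "role": "user", "content": "Multiply 2 and 3"}]

# A message with a new id is added after the existing one.
appended = merge_messages(
    history, [{"id": "2", "role": "user", "content": "No, I want to multiply 4 and 3!"}]
)

# A message that reuses an existing id replaces the original.
replaced = merge_messages(
    history, [{"id": "1", "role": "user", "content": "Multiply 4 and 3"}]
)
```

So to correct an earlier message rather than add a new one, the update would need to carry the id of the message it replaces.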

Now, we’re back at the assistant, which has our breakpoint.

We can again pass None to proceed.

for event in graph.stream(None, thread, stream_mode="values"):
    event['messages'][-1].pretty_print()

================================= Tool Message =================================

Name: multiply

12

================================== Ai Message ==================================

4 multiplied by 3 equals 12.

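2. Using the interrupt function

We have seen how breakpoints help to implement human-in-the-loop. You can also use the interrupt function to do the same from inside a node. It behaves much like Python’s built-in input(): it pauses the graph, surfaces a value to the user, and returns whatever the user supplies when the graph is resumed. Let’s see this in action.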
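As a rough mental model of this pause-and-resume handshake, here is a plain Python generator that surfaces a question and later receives the answer. It is only an analogy: `human_step` is a hypothetical function, and unlike a generator, LangGraph saves the pause in the checkpointer and, as far as I understand the documentation, re-runs the node from its start when resumed.

```python
def human_step(messages):
    """Pause with a question, then continue with whatever the caller sends back."""
    answer = yield f"What should I say about {messages}?"  # pause point
    yield {"role": "assistant", "content": answer}         # runs after the resume


step = human_step(["hi!"])
question = next(step)              # runs up to the pause and surfaces the question
reply = step.send("Good Evening")  # resumes with the human's answer
```

Here `next(step)` plays the role of the first invoke that stops at the interrupt, and `step.send(...)` plays the role of resuming with a value.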

from langgraph.checkpoint.memory import MemorySaver
from langgraph.graph import MessagesState, StateGraph
from langgraph.types import interrupt, Command

# Define a node that pauses for human input
def human_node(state: MessagesState):
    # interrupt() pauses the graph here and surfaces this prompt to the caller
    value = interrupt(f"What should I say about {state['messages']}")
    # The value supplied on resume is stored as an assistant message
    return {"messages": [{"role": "assistant", "content": value}]}

# Build the graph
checkpointer = MemorySaver()
graph_builder = StateGraph(MessagesState)
graph_builder.add_node(human_node)
graph_builder.set_entry_point("human_node")
graph = graph_builder.compile(checkpointer=checkpointer)

Our graph is ready, let’s test it out!

config = {"configurable":{"thread_id":"1"}}

graph.invoke({"messages":[{"role":"user","content":"hi!"}]},config=config)

# OUTPUT

#{'messages': [HumanMessage(content='hi!', additional_kwargs={}, response_metadata={}, id='f00b7a64-2e5d-48f2-b2a0-aa95e2122f5f')]}

Let’s look into the current graph state to get a clear idea.

curr_state = graph.get_state(config)
print(curr_state)

StateSnapshot(values={'messages': [HumanMessage(content='hi!', additional_kwargs={}, response_metadata={}, id='0b43ab99-50a5-437f-a41e-d1bc740d0e6e')]}, next=('human_node',),

config={'configurable': {'thread_id': '1', 'checkpoint_ns': '', 'checkpoint_id': '1efe166d-241e-64d2-8000-7d0a1a9989ad'}}, metadata={'source': 'loop', 'writes': None, 'thread_id': '1', 'step': 0, 'parents': {}},

created_at='2025-02-02T13:08:40.222204+00:00', parent_config={'configurable': {'thread_id': '1', 'checkpoint_ns': '', 'checkpoint_id': '1efe166d-2416-6d86-bfff-da8b8b9a24da'}}, tasks=(PregelTask(id='4e431558-f86a-ce57-2d40-63a37c56b30d',

name='human_node', path=('__pregel_pull', 'human_node'), error=None,

interrupts=(Interrupt(value="What should I say about [HumanMessage(content='hi!', additional_kwargs={}, response_metadata={}, id='0b43ab99-50a5-437f-a41e-d1bc740d0e6e')]",

resumable=True, ns=['human_node:4e431558-f86a-ce57-2d40-63a37c56b30d'], when='during'),), state=None, result=None),))

As you can see, the task for human_node carries an interrupts entry, which means our graph is paused. Now, let’s resume it by passing a value through Command(resume=...); by our node’s logic, that value will be stored as an AI message.

graph.invoke(Command(resume="Good Evening"), config=config)

{'messages': [HumanMessage(content='hi!', additional_kwargs={}, response_metadata={}, id='f00b7a64-2e5d-48f2-b2a0-aa95e2122f5f'),

AIMessage(content='Good Evening', additional_kwargs={}, response_metadata={}, id='41f2d68e-a0e1-4fb9-b697-9f71b82ccb6d')]}

Done, your input is now recorded in the workflow’s state. The official LangGraph guide on interrupts covers this in more detail if you want to know more.
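When a thread may or may not be paused, it helps to read the pending interrupt payloads off the state snapshot before deciding how to resume. The helper below is a small sketch: `pending_interrupts` is a hypothetical name, and it assumes the snapshot exposes `tasks`, each with an `interrupts` tuple whose items have a `value`, as in the StateSnapshot printed above.

```python
def pending_interrupts(snapshot):
    """Return the payloads of all interrupts waiting on a state snapshot."""
    return [intr.value for task in snapshot.tasks for intr in task.interrupts]
```

Calling `pending_interrupts(graph.get_state(config))` on the paused thread would return the prompt text passed to interrupt, and an empty list means there is nothing waiting for a human.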

Conclusion

Human-in-the-loop is a critical part of any agentic workflow that needs human approval before proceeding further. As you saw, breakpoints and the interrupt function let us pause a graph, inspect its state, and add or modify values before resuming.
