Classification algorithms in machine learning, such as logistic regression, SVC, and decision tree classifiers, predict which class a sample belongs to, and many of them use probability to do so. Naive Bayes is a supervised machine learning algorithm used for classification problems. Let’s see how it works.

Principle of Naive Bayes classifier

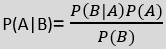

The Naive Bayes classifier is one of the fastest and simplest algorithms in machine learning. It is based on Bayes' theorem, which is as follows:

Bayes' theorem works on conditional probability: it gives the probability of event A given that event B has already occurred. A is called the hypothesis and B is called the evidence. Naive Bayes makes one assumption: all features (predictors) are independent of each other given the class, so one feature cannot affect another. This is why it is called naive.
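
For reference, the standard statement of Bayes' theorem in the notation used here (A is the hypothesis, B is the evidence) can be written as:

$$
P(A \mid B) = \frac{P(B \mid A)\, P(A)}{P(B)}
$$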

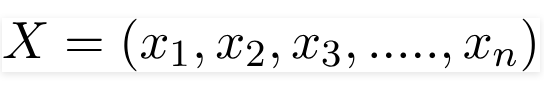

Suppose X is the vector of independent features and y is the target, for example whether to play golf or not.

So X will be,

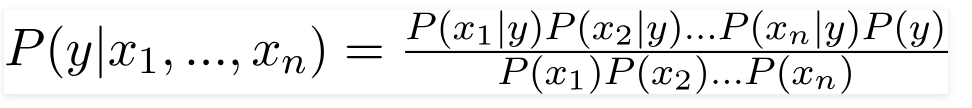

By applying the chain rule, we can get,

Here the denominator P(x1)P(x2)…P(xn) is the same for every class, so the conditional probability is directly proportional to the numerator of the equation.

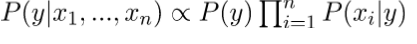

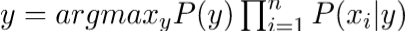

If we simplify the last formula then,

With Naive Bayes, we calculate the probability of each target class and then select the class with the highest probability. Therefore, we take the class that maximizes the formula.
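
The steps described above can be summarized in one short derivation. This is a sketch in the usual textbook notation, where x_1, …, x_n are the features, y is the class, and the symbol ŷ for the predicted class is introduced here for illustration:

$$
\begin{aligned}
P(y \mid x_1, \dots, x_n) &= \frac{P(y)\, \prod_{i=1}^{n} P(x_i \mid y)}{P(x_1)\, P(x_2) \cdots P(x_n)} \\
P(y \mid x_1, \dots, x_n) &\propto P(y)\, \prod_{i=1}^{n} P(x_i \mid y) \\
\hat{y} &= \arg\max_{y}\; P(y)\, \prod_{i=1}^{n} P(x_i \mid y)
\end{aligned}
$$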

Example of Naive Bayes classifier

| No | Outlook | Temperature | Play Golf |
|---|---|---|---|
| 0 | Rainy | Hot | No |
| 1 | Rainy | Hot | No |
| 2 | Overcast | Hot | Yes |
| 3 | Sunny | Mild | Yes |
| 4 | Sunny | Cool | Yes |
| 5 | Sunny | Cool | No |
| 6 | Overcast | Cool | Yes |
| 7 | Rainy | Mild | No |
| 8 | Rainy | Cool | Yes |
| 9 | Sunny | Mild | Yes |
| 10 | Rainy | Mild | Yes |
| 11 | Overcast | Mild | Yes |
| 12 | Overcast | Hot | Yes |
| 13 | Sunny | Mild | No |

Here the two features, Outlook and Temperature, are given, and we have to predict on the basis of these features whether we can play golf or not.

We have to make a frequency table and likelihood table for each feature.

Frequency table for Outlook:

| Outlook | Yes | No | Likelihood given Yes | Likelihood given No |
|---|---|---|---|---|
| Rainy | 2 | 3 | 2/9 | 3/5 |
| Sunny | 3 | 2 | 3/9 | 2/5 |
| Overcast | 4 | 0 | 4/9 | 0/5 |
| Total | 9 | 5 | | |

The above table is important for deriving the conditional probabilities. For example, the probability of Sunny given Yes, P(Sunny | Yes), is 3/9.
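
The frequency and likelihood tables can also be computed rather than counted by hand. The following is a minimal sketch, assuming pandas is available; the variable names are illustrative, and the data is copied from the golf table above.

```python
import pandas as pd

# Outlook and Play Golf columns copied from the example table (rows 0 to 13)
outlook = ["Rainy", "Rainy", "Overcast", "Sunny", "Sunny", "Sunny", "Overcast",
           "Rainy", "Rainy", "Sunny", "Rainy", "Overcast", "Overcast", "Sunny"]
play = ["No", "No", "Yes", "Yes", "Yes", "No", "Yes",
        "No", "Yes", "Yes", "Yes", "Yes", "Yes", "No"]
df = pd.DataFrame({"Outlook": outlook, "Play Golf": play})

# Frequency table: how often each Outlook value occurs with each class
frequency = pd.crosstab(df["Outlook"], df["Play Golf"])

# Likelihood table: each column divided by its class total,
# so the "Yes" column holds P(Outlook value | Yes)
likelihood = pd.crosstab(df["Outlook"], df["Play Golf"], normalize="columns")

print(frequency)
print(likelihood.round(3))
```

The same two calls, applied to the Temperature column, would give the second table.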

Now we create the same kind of table for the Temperature feature.

| Temperature | Yes | No | Likelihood given Yes | Likelihood given No |
|---|---|---|---|---|
| Hot | 2 | 2 | 2/9 | 2/5 |
| Mild | 4 | 2 | 4/9 | 2/5 |
| Cool | 3 | 1 | 3/9 | 1/5 |
| Total | 9 | 5 | | |

Finally, we calculate the probability for the target column, which is Play Golf.

| Play Golf | P(Yes) | P(No) |
|---|---|---|
| Yes/No | 9/14 | 5/14 |

That’s it. Now you have all the conditional probabilities required by Bayes' theorem.

Now let’s take an example. If the outlook is Sunny and the temperature is Hot, should we play golf or not?

You have to calculate a probability for Yes and a probability for No.

P(Yes | Sunny, Hot) ∝ P(Yes) * P(Sunny | Yes) * P(Hot | Yes)

= 9/14 * 3/9 * 2/9

= 1/21

≈ 0.048

P(No | Sunny, Hot) ∝ P(No) * P(Sunny | No) * P(Hot | No)

= 5/14 * 2/5 * 2/5

= 2/35

≈ 0.057

These two values are unnormalized scores, so we divide each by their sum to get the percentage for each case.

Percentage of P(Yes | Sunny, Hot) = (1/21) / (1/21 + 2/35) * 100 ≈ 45.5%

Percentage of P(No | Sunny, Hot) = (2/35) / (1/21 + 2/35) * 100 ≈ 54.5%

As you can see, the probability of No is higher than that of Yes. So if the outlook is Sunny and the temperature is Hot, the answer will be No. That is how the Naive Bayes classifier works.
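
The worked example can be checked with a few lines of Python. This is a minimal sketch that hard-codes the values from the tables above; the dictionary names are illustrative. Working with exact fractions avoids rounding the intermediate scores, which gives about 45.5% for Yes and 54.5% for No.

```python
from fractions import Fraction

# Values read from the frequency and likelihood tables in the example
prior = {"Yes": Fraction(9, 14), "No": Fraction(5, 14)}
p_sunny = {"Yes": Fraction(3, 9), "No": Fraction(2, 5)}   # P(Sunny | class)
p_hot = {"Yes": Fraction(2, 9), "No": Fraction(2, 5)}     # P(Hot | class)

# Unnormalized score for each class: prior times the feature likelihoods
score = {c: prior[c] * p_sunny[c] * p_hot[c] for c in prior}

# Normalize so the two values sum to 1
total = sum(score.values())
posterior = {c: score[c] / total for c in score}

# Pick the class with the highest posterior probability
prediction = max(posterior, key=posterior.get)

for c in posterior:
    print(c, score[c], f"{float(posterior[c]):.1%}")
print("Prediction:", prediction)
```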

The Naive Bayes classifier is affected by an imbalanced dataset, because the class prior P(y) is estimated from how often each class appears in the training data. Methods such as upsampling and downsampling can help with this.
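
As an illustration of upsampling, the sketch below balances a small, made-up dataset with `sklearn.utils.resample`. The DataFrame and its column names are hypothetical, and this is only one of several ways to rebalance classes.

```python
import pandas as pd
from sklearn.utils import resample

# Hypothetical imbalanced toy data: 8 rows of class 0, 2 rows of class 1
df = pd.DataFrame({
    "feature": range(10),
    "label": [0] * 8 + [1] * 2,
})

majority = df[df["label"] == 0]
minority = df[df["label"] == 1]

# Upsample: draw minority rows with replacement until both classes match in size
minority_upsampled = resample(
    minority,
    replace=True,
    n_samples=len(majority),
    random_state=0,
)

balanced = pd.concat([majority, minority_upsampled])
print(balanced["label"].value_counts())
```

In practice, resampling is usually applied to the training split only, so that the test data keeps its original class balance.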

Implementation of Naive Bayes Classifier in Python

You can fit the Naive Bayes classifier on any dataset. Here we use the Titanic dataset. Like other algorithms, the Naive Bayes classifier is available in the sklearn library.

    import pandas as pd
    from sklearn.model_selection import train_test_split
    from sklearn.naive_bayes import GaussianNB

    df = pd.read_csv("titanic.csv")

    # Preprocessing: drop the columns that are not used as features
    df.drop(['PassengerId', 'Name', 'SibSp', 'Parch', 'Ticket', 'Cabin', 'Embarked'], axis=1, inplace=True)
    X = df.drop('Survived', axis=1)
    y = df.Survived

    # One-hot encode Sex and keep only the 'female' indicator column
    dummies = pd.get_dummies(X.Sex)
    X = pd.concat([X, dummies], axis=1)
    X.drop(['Sex', 'male'], axis=1, inplace=True)

    # Fill missing ages with the mean age
    X["Age"] = X.Age.fillna(X["Age"].mean())

    # Split the data for training and testing
    X_train, X_test, y_train, y_test = train_test_split(X, y, test_size=0.3)

    # Fit GaussianNB because some features have continuous values
    model = GaussianNB()
    model.fit(X_train, y_train)
    print(model.predict(X_test))

You can also use grid search with cross-validation (GridSearchCV) to tune the model and select the configuration that gives the best precision, recall, or F1 score.
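
A grid search over a Naive Bayes model might look like the sketch below. It tunes `var_smoothing`, a parameter of `GaussianNB`, and scores candidates by F1. The synthetic data from `make_classification` and the grid values are assumptions chosen so the example runs on its own; with the Titanic data you would pass `X_train` and `y_train` instead.

```python
from sklearn.datasets import make_classification
from sklearn.model_selection import GridSearchCV
from sklearn.naive_bayes import GaussianNB

# Synthetic stand-in data (an assumption, so the example is self-contained)
X, y = make_classification(n_samples=300, n_features=5, random_state=0)

# Candidate values for GaussianNB's variance smoothing term (illustrative grid)
param_grid = {"var_smoothing": [1e-11, 1e-9, 1e-7, 1e-5, 1e-3]}

# 5-fold cross-validated search, ranking candidates by F1 score
search = GridSearchCV(GaussianNB(), param_grid, scoring="f1", cv=5)
search.fit(X, y)

print(search.best_params_)
print(round(search.best_score_, 3))
```

Changing `scoring` to `"precision"` or `"recall"` selects the model by that metric instead.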

Types Of Naive Bayes Classifier

1. Multinomial Naive Bayes

It is used when the features are discrete counts, such as how often each word appears in a document, and it handles both binary and multi-class targets. A common use is news classification, deciding which category an article belongs to (e.g., Sports, Politics, Technology). The golf example above also uses discrete features, although they are categories rather than counts.

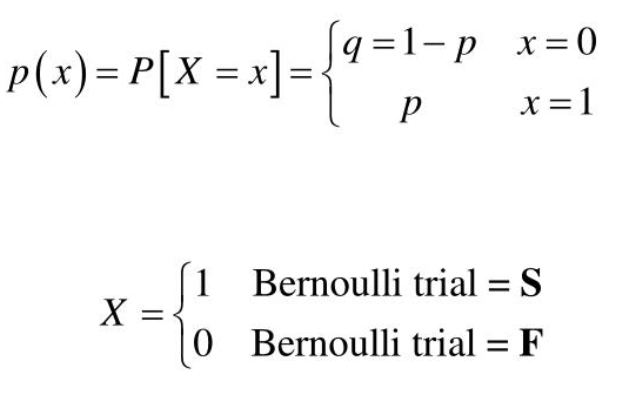
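
To make the news classification use case concrete, here is a minimal sketch with sklearn's `CountVectorizer` and `MultinomialNB`. The four training sentences and their labels are made up for illustration; a real classifier would need far more data.

```python
from sklearn.feature_extraction.text import CountVectorizer
from sklearn.naive_bayes import MultinomialNB

# Hypothetical toy corpus: two sports headlines and two politics headlines
docs = [
    "the team won the match",
    "the striker scored a goal",
    "parliament passed the new law",
    "the minister gave a speech on the election",
]
labels = ["sports", "sports", "politics", "politics"]

# Turn each document into a vector of word counts
vectorizer = CountVectorizer()
X = vectorizer.fit_transform(docs)

# Multinomial Naive Bayes models these word counts per class
model = MultinomialNB()
model.fit(X, labels)

# Classify a new headline using the same vocabulary
new_doc = vectorizer.transform(["the team scored a late goal"])
prediction = model.predict(new_doc)[0]
print(prediction)
```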

2. Bernoulli Naive Bayes

Bernoulli Naive Bayes is similar to multinomial Naive Bayes. The only difference is that the predictors take only boolean values, for example, whether there was wind or not.

Here p is the probability of success and q is the probability of failure (q = 1 - p).

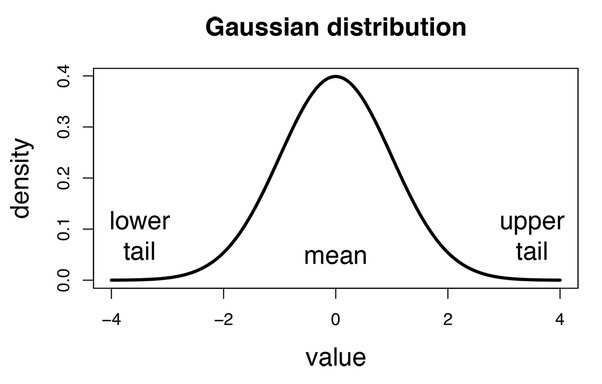
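
A small sketch of Bernoulli Naive Bayes with boolean predictors, using sklearn's `BernoulliNB`. The data is a hypothetical toy set in which each row records whether it was windy and whether it was raining.

```python
import numpy as np
from sklearn.naive_bayes import BernoulliNB

# Hypothetical toy data: columns are [windy, raining], 1 = yes, 0 = no
X = np.array([
    [1, 1],
    [1, 0],
    [0, 1],
    [0, 0],
    [1, 1],
    [0, 0],
])
# Target: 1 = play, 0 = do not play
y = np.array([0, 0, 1, 1, 0, 1])

model = BernoulliNB()
model.fit(X, y)

# Class probabilities for a windy day without rain
proba = model.predict_proba(np.array([[1, 0]]))
print(model.classes_, proba.round(3))
```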

3. Gaussian Naive Bayes

When the predictors take continuous values, Gaussian Naive Bayes is used. It assumes that, within each class, the values of each feature follow a normal distribution.
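
Under this assumption, the likelihood of a continuous feature value is usually computed from the normal density. In the expression below, the per-class mean μ and variance σ² of feature i are estimated from the training data; these symbols are notation introduced here for illustration.

$$
P(x_i \mid y) = \frac{1}{\sqrt{2\pi\sigma_{y,i}^{2}}} \exp\!\left(-\frac{(x_i - \mu_{y,i})^{2}}{2\sigma_{y,i}^{2}}\right)
$$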

Advantages of Naive Bayes Classifier

- Very useful in text classification problems.

- Fast and easy algorithm to predict the class of datasets.

- Used for both binary and multi-class classification.

Disadvantages of Naive Bayes Classifier

- It cannot learn relationships among predictors, because it assumes that every feature is independent and has no effect on other features.

Applications of Naive Bayes Classifier

- Face recognition

- Weather prediction

- Medical diagnosis

- Spam detection

- Sentiment analysis

- Language identification

- News Classification
